For training departments, the work shouldn’t end when the courses are designed, the content is built, the training is delivered, and training history is recorded. While no one can blame you for feeling a sense of accomplishment after spending countless hours developing and delivering effective training programs, some of the most important information has yet to be gathered at this point. Evaluating the effectiveness of training offerings is a critical step that is often overlooked in favor of investing more time and money in the next training program. This can be a costly mistake for training organizations, because the opportunity cost of ineffective training can be severe in the long run.

SuccessFactors Learning provides training evaluation features, based on an established model of training evaluation theory, that help organizations quickly gather feedback from training participants and managers alike. Administrators in SuccessFactors Learning can build surveys for various evaluation purposes, streamlining the process of gathering evaluation results. Reporting options are also available to analyze survey results quickly and see what is working and what may not be. In this blog, I want to highlight functionality within SuccessFactors Learning that organizations can use to efficiently evaluate the performance of their training programs and measure how effectively training participants apply what they learned.

The SuccessFactors Learning Training Evaluation Model

Training evaluation within SuccessFactors Learning is based on Donald Kirkpatrick’s established training evaluation model. The Kirkpatrick model takes a four-level approach to training evaluation: evaluation begins at the first level and proceeds through the higher levels as needed (and as time and budget allow). The four levels are summarized below.

- Level 1 – Reactions – Initial feedback on the learner’s impressions of the training

- Level 2 – Learning – Measurement of the increase in knowledge or capability gained by the learner as a result of the training

- Level 3 – Behavior/Transfer – Extent to which the participant applies the learning on the job some time after completing the training

- Level 4 – Results – Effects on the business/environment resulting from the learner’s performance

Implementing the Training Evaluation Model

SuccessFactors Learning provides several tools to implement this training evaluation model. The table below maps the supported levels of the Kirkpatrick model to the corresponding tools.

| Model Level | SF Learning Tool |
| --- | --- |
| 1 – Reactions | Item Evaluation Survey |
| 2 – Learning | Exams (Pre/Post Exams) |
| 3 – Behavior/Transfer | Follow-Up Evaluation Survey |

For the purposes of this blog, I am going to cover the survey-specific tools within the LMS that support Levels 1 and 3 of the training evaluation model. Exams can be created within the LMS and assigned as pre/post assessments of training participants (Level 2, maybe the topic of a future blog). Toward the end of this blog, I will also cover the reports available for survey analysis.

Item Evaluation Surveys

Item Evaluation Surveys within SuccessFactors Learning are used to gather Level 1 (Reaction) feedback from training participants. Most organizations already run these evaluations to some extent, because they are a quick and inexpensive way to capture learners’ impressions immediately after they complete training. While you do not want to overcomplicate these surveys, give some thought to the questions presented and the feedback they are intended to gather. Typical questions center on the relevance of the content, the training’s ability to maintain interest, and feedback on the instructor, among others.

Within SuccessFactors Learning, administrators can build evaluation surveys directly in the LMS and assign them to Items. Administrators can access this tool from the Learning Administration page under Learning->Questionnaire Surveys. Surveys can be structured as multiple pages with multiple questions per page to organize common groups of feedback questions (e.g., Course Feedback, Instructor Feedback). The questionnaire interface is intuitive, allowing an admin to create pages and questions with relative ease.

Questions created within a survey can be one of four (4) defined types, described below. A screen shot of the survey builder within the LMS is provided as well.

- Rating Scale – Responses based on a defined (configured) rating scale within the LMS (useful for gathering quantitative results)

- One Choice – Responses based on a defined list of possible responses where the user can select only one as an answer

- Multiple Choice – Responses based on a defined list of possible responses where the user can select multiple answers from the group

- Open Ended – Free-text response from user (up to 3,990 characters)
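To make the rules behind the four question types concrete, here is a minimal sketch of how a response could be validated against each type. This is not SuccessFactors code or an API: the class, enum, and function names are hypothetical, and the requirement that a Multiple Choice answer contain at least one selection is an assumption. Only the 3,990-character limit comes from the list above.

```python
from dataclasses import dataclass, field
from enum import Enum

# Character limit quoted above for Open Ended responses.
OPEN_ENDED_MAX_CHARS = 3990


class QuestionType(Enum):
    RATING_SCALE = "rating_scale"
    ONE_CHOICE = "one_choice"
    MULTIPLE_CHOICE = "multiple_choice"
    OPEN_ENDED = "open_ended"


@dataclass
class Question:
    text: str
    qtype: QuestionType
    # Allowed answers for choice questions, or the configured scale values
    # for Rating Scale questions (hypothetical representation).
    choices: list = field(default_factory=list)


def is_valid_response(question: Question, response) -> bool:
    """Check one response against the rule for its question type."""
    if question.qtype is QuestionType.OPEN_ENDED:
        # Free text, capped at the documented length.
        return isinstance(response, str) and len(response) <= OPEN_ENDED_MAX_CHARS
    if question.qtype in (QuestionType.RATING_SCALE, QuestionType.ONE_CHOICE):
        # Exactly one value, taken from the configured list.
        return response in question.choices
    if question.qtype is QuestionType.MULTIPLE_CHOICE:
        # A collection of distinct values, all from the configured list;
        # requiring at least one selection is an assumption.
        if isinstance(response, str):
            return False
        selected = list(response)
        return (
            len(selected) > 0
            and len(set(selected)) == len(selected)
            and all(choice in question.choices for choice in selected)
        )
    return False
```

Rating Scale and One Choice share a rule here because both accept a single value from a configured list; the difference is only in how the LMS presents and reports them.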

Various options can be specified on an Item Evaluation Survey to dictate how the survey behaves when assigned to a User via an Item. As illustrated in the screen shot below, within the Options area of the Survey administration page, an admin can specify whether the survey is anonymous, whether it is required for item completion (i.e., the user is not credited with the training until the survey is complete), the number of days allowed to complete it, and whether to include a comments field for each question.

After completing the setup of a questionnaire survey, the admin must set it to Active and Published before it can be used. An admin can preview the survey at any time to see how it will look to users completing it, as illustrated by the screen shot below.

Once a survey is set up and published, it must be assigned to Items so that it can be assigned to users as they complete those learning items within the LMS. Surveys can be assigned to Items in the Survey administration pages. In addition, within the Item administration pages, an Item’s Evaluation settings can be found under Related (More)-> Evaluations. The survey defaults for Days to Complete and for whether the survey must be completed before the Item completion is recorded can be overridden at the Item assignment level, as illustrated in the screen shot below.
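The relationship between survey defaults and Item-level overrides can be sketched as a simple "override if set, otherwise inherit" rule. This is an illustration, not the LMS’s internal logic: the dataclasses and field names are hypothetical stand-ins for the Options area and the Related (More)-> Evaluations settings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SurveyOptions:
    """Survey-level defaults from the Options area (field names are hypothetical)."""
    anonymous: bool
    required_for_completion: bool
    days_to_complete: int
    comments_per_question: bool


@dataclass
class ItemAssignmentOverrides:
    """Item-level settings; None means 'inherit the survey default'."""
    required_for_completion: Optional[bool] = None
    days_to_complete: Optional[int] = None


def effective_options(defaults: SurveyOptions,
                      overrides: ItemAssignmentOverrides) -> SurveyOptions:
    """Apply Item-level overrides on top of the survey defaults."""
    return SurveyOptions(
        anonymous=defaults.anonymous,
        required_for_completion=(
            overrides.required_for_completion
            if overrides.required_for_completion is not None
            else defaults.required_for_completion
        ),
        days_to_complete=(
            overrides.days_to_complete
            if overrides.days_to_complete is not None
            else defaults.days_to_complete
        ),
        comments_per_question=defaults.comments_per_question,
    )
```

The explicit `is not None` checks matter: `False` (survey not required) and `0` are legitimate override values, and a plain truthiness test would silently fall back to the default.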

Follow-Up Evaluations

Follow-Up Evaluations within SuccessFactors Learning are used to gather Level 3 (Behavior/Transfer) feedback from training participants and/or their managers. This is the level of training evaluation that most training organizations struggle with or do not attempt at all. The challenge is that these evaluations should happen a set period of time after the learner completes the training. Coordinating their completion is difficult, and structuring them to ask the appropriate questions can be a challenge as well. If done well, though, the feedback from these evaluations is critical to answering the question everyone wants answered about training effectiveness: whether learners’ behavior actually changes as a result of the learning itself.

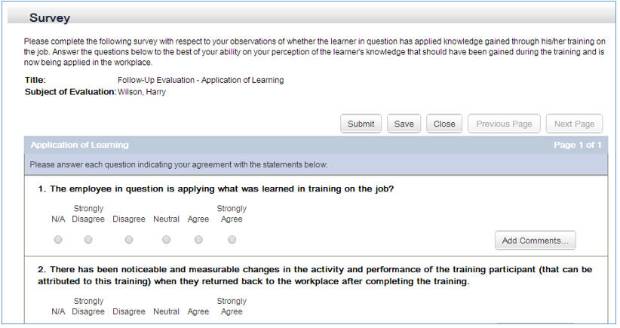

Typically, Follow-Up Evaluations should be performed three (3) to six (6) months after the training is completed, allowing enough time for the learner to apply the newly acquired skills on the job. SuccessFactors allows this type of evaluation to be sent to and completed by the user, the manager, or both.

I previously described how Item Level Evaluations are created via Questionnaire Surveys in the LMS. Functionally, there is no major difference in how the two types of surveys are created with the survey tool. The surveys do, however, serve different purposes, as discussed above, so the questions they contain should be quite different. Questions on a follow-up survey should focus on measurable or perceived evaluation of the individual’s performance against criteria directly related to the completed training program.

Options that can be specified on Follow-Up Evaluation surveys differ slightly from those on Item Level Evaluation surveys. As illustrated in the screen shot below, an admin can specify the number of days after training completion that the survey should be assigned, the number of days allowed to complete the survey, who should participate in the survey (employee, supervisor, or both), and whether comment fields should be supplied for each question.

Similar to Item Level Evaluation surveys, Follow-Up surveys must be assigned to the Items that require this type of evaluation. Assignments can be made within the Survey administration pages as well as the Item administration area. Within the Item administration pages, an Item’s Evaluation settings can be found under Related (More)-> Evaluations. The defaults for participant types, number of days before assignment, and number of days allowed to complete can be overridden at the Item assignment level, as shown in the screen shot below.
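The two Follow-Up options that shape *when* and *to whom* a survey goes can be sketched as follows. The function and enum names are hypothetical, and the example uses 90 days to stand in for the three-month window suggested earlier; this illustrates the arithmetic, not how the LMS implements it.

```python
from datetime import date, timedelta
from enum import Enum


class Participants(Enum):
    EMPLOYEE = "employee"
    SUPERVISOR = "supervisor"
    BOTH = "both"


def follow_up_plan(completion_date: date, days_after_completion: int,
                   participants: Participants, employee: str, supervisor: str):
    """Return (assignment_date, recipients) for one completed Item."""
    # The survey becomes due for assignment N days after training completion.
    assign_on = completion_date + timedelta(days=days_after_completion)
    if participants is Participants.EMPLOYEE:
        recipients = [employee]
    elif participants is Participants.SUPERVISOR:
        recipients = [supervisor]
    else:
        # BOTH: the learner and their supervisor each receive the survey.
        recipients = [employee, supervisor]
    return assign_on, recipients
```

For a course completed on January 15, 2024, with a 90-day follow-up sent to both employee and supervisor, the sketch yields an assignment date of April 14, 2024 for both recipients.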

Assignment of Evaluations to Users

While evaluations must be assigned to an Item in order to be assigned to users, SuccessFactors Learning ultimately decides whether to assign an evaluation to a user based on the Completion Status the user achieves when completing a learning item within the LMS. Configuration at the Completion Status level lets administrators specify which statuses should result in evaluations being assigned to users (assuming the Item has one or more evaluations related to it). For instance, certain completion statuses may be eligible for both Item Level and Follow-Up Evaluations whenever a user completes an item with that status (e.g., ‘Course Passed’). Other statuses may not be eligible for a Follow-Up Evaluation (e.g., ‘Completed Briefing’), and some statuses should not require evaluations at all (e.g., ‘Incomplete’ or ‘Substitute Credit Given’). As illustrated below, the configuration specified when creating Completion Statuses in the LMS (References->Learning->Completion Status) determines when evaluations are assigned as users complete learning items and receive a completion status. To reiterate, though, the Items being recorded in learning history with these Completion Statuses must have evaluations assigned to them for anything to be assigned to a user. If an item has no evaluation associated with it, no assignment is made, even if the Completion Status indicates the item is eligible for an Item Level and/or Follow-Up Evaluation.
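The rule in this paragraph is effectively a logical AND: the Completion Status must be flagged for an evaluation type, *and* the Item must have a survey of that type attached. A minimal sketch, with hypothetical class, field, and survey names, using the example statuses mentioned above:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CompletionStatus:
    """Completion Status eligibility flags (field names are hypothetical)."""
    name: str
    item_evaluation: bool
    follow_up_evaluation: bool


def evaluations_to_assign(status: CompletionStatus,
                          item_surveys: list, follow_up_surveys: list) -> list:
    """Return the surveys to assign when a user completes an Item with `status`.

    A survey is assigned only if the status is eligible for that evaluation
    type AND the Item actually has a survey of that type attached.
    """
    assigned = []
    if status.item_evaluation:
        assigned.extend(item_surveys)
    if status.follow_up_evaluation:
        assigned.extend(follow_up_surveys)
    return assigned


# Example statuses from the text; their flag values are illustrative.
COURSE_PASSED = CompletionStatus("Course Passed", True, True)
COMPLETED_BRIEFING = CompletionStatus("Completed Briefing", True, False)
INCOMPLETE = CompletionStatus("Incomplete", False, False)
```

Passing empty survey lists for an Item with no evaluations returns nothing, regardless of how the status is flagged, which mirrors the final point in the paragraph above.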

After the desired Item and Follow-Up surveys have been created, published, and assigned to the desired Items within the LMS, and the appropriate Completion Statuses are configured to include the desired evaluations, the surveys can be assigned to users. Item Level Evaluation surveys are assigned to users upon completion of the item (i.e., when the learning event is recorded). Follow-Up Evaluation surveys require an APM (Automatic Process Module) to be set up to synchronize evaluations and assign them to users once the follow-up period after their training completion has elapsed. This APM can be set up under System Admin->Automatic Processes->Evaluation Synchronization. Running this process nightly should be sufficient for managing Follow-Up Evaluation assignments. As assignments are made, notification emails are sent to users to let them know which surveys they should complete.
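Conceptually, a nightly synchronization pass scans recorded completions and assigns any Follow-Up survey whose waiting period has elapsed. The sketch below only illustrates that idea and is not the Evaluation Synchronization APM itself: the `Completion` record, the `days_after_by_item` mapping, and the "already assigned" flag are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Completion:
    """A recorded learning event (hypothetical record)."""
    user_id: str
    item_id: str
    completed_on: date
    follow_up_assigned: bool = False


def synchronize_follow_ups(completions, days_after_by_item, today):
    """Return completions whose follow-up period has elapsed, marking them assigned.

    `days_after_by_item` maps Item IDs to their days-after-completion setting;
    Items without a Follow-Up survey are simply absent from the mapping.
    """
    newly_assigned = []
    for c in completions:
        days_after = days_after_by_item.get(c.item_id)
        if days_after is None or c.follow_up_assigned:
            continue  # no Follow-Up survey on this Item, or already handled
        # ">=" (not "==") lets a later run catch up if a nightly run was missed.
        if today >= c.completed_on + timedelta(days=days_after):
            c.follow_up_assigned = True
            newly_assigned.append(c)
    return newly_assigned
```

The `follow_up_assigned` flag makes repeated runs idempotent: running the job again the next night does not assign the same survey twice.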

User Access to Training Evaluations

Users can access assigned training evaluations from their Learning home page in the My Learning Assignments tile. Like all other learning assignments, these are sorted by due date. Due dates for evaluations are calculated from the days-to-complete options discussed previously, counted from the time of training completion. Surveys are not removed from a user’s learning assignments after the days to complete have expired; instead, they show as overdue until they are completed or removed.
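The due-date behavior described here (due date counted from training completion, overdue surveys kept on the list, assignments sorted by due date) can be sketched as below. The function names and the tuple layout are hypothetical, and treating the due date itself as still on time is an assumption.

```python
from datetime import date, timedelta


def due_date(completed_on: date, days_to_complete: int) -> date:
    """Due date counted from training completion."""
    return completed_on + timedelta(days=days_to_complete)


def assignment_status(completed_on: date, days_to_complete: int, today: date,
                      submitted: bool = False) -> str:
    if submitted:
        return "completed"
    # Assumption: the due date itself still counts as on time.
    if today > due_date(completed_on, days_to_complete):
        return "overdue"  # overdue surveys stay on the list; they are not dropped
    return "pending"


def sort_by_due_date(assignments):
    """Sort (name, completed_on, days_to_complete) tuples by due date."""
    return sorted(assignments, key=lambda a: due_date(a[1], a[2]))
```

Sorting on the computed due date rather than the completion date matters: a survey completed later but with a shorter window can come due first.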

Each pending evaluation displays the Item (e.g., course) it relates to. If the evaluation is a Follow-Up Evaluation, the name of the user the evaluation concerns is also displayed. The user can either open the survey to complete it or remove it from their list without completing it. A user can fill out the survey, save progress for later, and submit when done. The screen shot below illustrates what the survey looks like when displayed to the user.

Survey Evaluation Analysis Reports

Several reports are available to admins within the LMS for analyzing completed evaluations. The following list summarizes them.

- Item Evaluation Report – calculates and displays the mean score for each survey, survey page, and survey question, including the percentage of users who selected each individual response.

  - Note – ‘Mean Score’ is calculated for Rating Scale questions: the average response on the configured scale is determined and displayed.

- Item Evaluation by Individual Response – a detailed report by user showing individual responses to survey questions.

- Item Evaluation by Instructor – calculates and displays the mean score for each survey and survey page, grouped by instructor.

  - A great way to compare an item’s average evaluation score across multiple instructors who teach the course.

  - Note – ‘Mean Score’ is calculated for Rating Scale questions: the average response on the configured scale is determined and displayed.

- Follow-Up Evaluation Report – calculates and displays the mean score for each survey, survey page, and survey question.

- Follow-Up Evaluation by Individual Response – calculates the mean score for each follow-up survey and survey page by rater (e.g., Employee/Manager).
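To show what these reports compute, the sketch below derives the mean score and per-response percentages for one Rating Scale question, plus a mean grouped by instructor (or by rater). It illustrates the arithmetic only; the function names and input shapes are hypothetical, and the actual reports are generated by the LMS.

```python
from collections import Counter, defaultdict


def summarize_rating_question(responses):
    """Mean score and % of respondents choosing each value for one Rating Scale question."""
    if not responses:
        return None, {}
    total = len(responses)
    mean = sum(responses) / total
    counts = Counter(responses)
    # Percentages rounded to one decimal place, keyed by scale value.
    pct = {value: round(100 * n / total, 1) for value, n in sorted(counts.items())}
    return mean, pct


def mean_by_group(rows):
    """rows: (group, score) pairs, e.g. (instructor, rating) -> {group: mean}."""
    scores = defaultdict(list)
    for group, score in rows:
        scores[group].append(score)
    return {group: sum(s) / len(s) for group, s in scores.items()}
```

For example, eight responses of 5, 4, 4, 3, 5, 5, 2, 4 give a mean score of 4.0, with 37.5% of respondents choosing 4 and 37.5% choosing 5.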